Trials and tribulations of 360° video in Juno

February 25, 2024

In building Juno, a visionOS app for YouTube, a question that’s come up from users a few times is whether it supports 360° and 180° videos (for the unfamiliar, it’s an immersive video format that fully surrounds you). The short answer is no, it’s sort of a niche feature without much adoption, but for fun I wanted to take the weekend and see what I could come up with. Spoiler: it’s not really possible, but the why is kinda interesting, so I thought I’d write a post talking about it, and why it’s honestly not a big loss at this stage.

How do you even… show a 360° video?

It’s actually a lot easier than you might think. A 360° (or 180°) video isn’t some crazy format in some crazy shape, it’s just a regular, rectangular video file in appearance, but it’s been recorded and stored in that rectangle slightly warped, with the expectation that however you display it will unwarp it.

So how do you display it? Also pretty simply, you just create a hollow sphere, and you tell your graphical engine (in iOS’ case: RealityKit) to stretch the video over the inside of the sphere. Then you put the user at the center of that sphere (or half-sphere, in the case of 180° video), and bam, immersive video.
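To make the "unwarping" a little more concrete, here is a minimal sketch of the lookup a renderer effectively performs when it textures the sphere. It assumes the frame uses the common equirectangular layout (the post doesn't say which projection a given video uses), and `directionToPixel` is a hypothetical helper for illustration, not a RealityKit API.

```javascript
// Sketch only: assumes an equirectangular frame, where the full width
// spans 360° of yaw and the full height spans 180° of pitch.
function directionToPixel(yawDeg, pitchDeg, width, height) {
  // yaw: -180 (behind you, left) .. 180 (behind you, right); 0 is straight ahead
  // pitch: 90 (straight up) .. -90 (straight down)
  const u = (yawDeg + 180) / 360;  // 0 = left edge, 1 = right edge
  const v = (90 - pitchDeg) / 180; // 0 = top edge, 1 = bottom edge
  return {
    // Clamp so the extreme angles still land inside the frame.
    x: Math.min(width - 1, Math.floor(u * width)),
    y: Math.min(height - 1, Math.floor(v * height)),
  };
}

// Looking straight ahead lands in the middle of a 3840×2160 frame.
console.log(directionToPixel(0, 0, 3840, 2160)); // { x: 1920, y: 1080 }
```

In practice you never write this yourself; the graphics engine does the equivalent mapping for every pixel you look at.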

There’s always a catch

In RealityKit, you get shapes, and you get materials you can texture those shapes with. But you can’t just use anything as a texture, silly. Applying a texture to a complex 3D shape can be a pretty intensive thing to do, so RealityKit basically only wants images, or videos (a bit of an oversimplification but it holds for this example). You can’t, for instance, show a scrolling recipe list or a dynamic map of your city and stretch that over a cube. “Views” in SwiftUI and UIKit (labels, maps, lists, web views, buttons, etc.) are not able to be used as a material (yet?).

This is a big problem for us. If you don’t remember, while Juno obviously shows videos, it uses web views to accomplish this, as it’s the only way YouTube allows developers to show YouTube videos (otherwise you could, say, avoid viewing ads which YouTube doesn’t want), and I don’t want to annoy Google/YouTube.

Web views, while they show a video, are basically a portal into a video watching experience. You’re just able to see the video, you don’t have access to the underlying video directly, so you can’t apply it as a texture with RealityKit. So we can’t show it on a sphere, so we can’t do 360° video.

Unless…

Let’s get inventive

Okay, so we only have access to the video through a web view. We can see the video though, so what if we just continue to use the web player, and as it plays for the user, we took snapshots of each video frame and painted those snapshots over the sphere that surrounds the user. Do it very rapidly, say, 24 times per second, and you effectively have 24 fps video. Like a flip book!

Well, easier said than done! The first big hurdle is that when you take a snapshot of a web view (WKWebView), everything renders into an image perfectly… except the playing video. I assume this is because the video is being hardware decoded in a way that is separate from how iOS performs the capture, so it’s absent. (It’s not because of DRM or anything like that; it also happens with ordinary videos hosted on my own website.)

This is fixable though, with JavaScript we can draw the HTML video element into a separate canvas, and then snapshot the canvas instead.

    const video = document.querySelector('video');
    const canvas = document.createElement('canvas');
    canvas.width = video.videoWidth;
    canvas.height = video.videoHeight;
    const ctx = canvas.getContext('2d');
    ctx.drawImage(video, 0, 0, canvas.width, canvas.height);
    video.style.display = 'none';
    document.body.prepend(canvas);
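The snippet above draws a single frame. To keep the canvas in step with playback, the draw has to repeat. Here is a minimal sketch of one way to do that, assuming a `requestAnimationFrame`-driven loop (the post doesn't show how the redraw was scheduled). `startMirroring` and its `schedule` parameter are hypothetical names; `schedule` only exists so the loop can be swapped out or exercised outside a browser.

```javascript
// Sketch only: repeatedly copy the current video frame into the canvas.
// `schedule` defaults to the browser's requestAnimationFrame.
function startMirroring(video, canvas, schedule) {
  const ctx = canvas.getContext('2d');
  const next = schedule || ((cb) => requestAnimationFrame(cb));
  let running = true;

  function tick() {
    if (!running) return;
    // Only redraw while the video is actually advancing.
    if (!video.paused && !video.ended) {
      ctx.drawImage(video, 0, 0, canvas.width, canvas.height);
    }
    next(tick);
  }

  next(tick);
  // Call the returned function to stop the loop.
  return () => { running = false; };
}

// In a page: const stop = startMirroring(video, canvas);
```

This only keeps the canvas fresh inside the web view; getting each frame out to Swift quickly is the hard part.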

Okay, now we have the video frame visible. How do we capture it? There’s a bunch of different tactics I tried for this, and I couldn’t quite get any of them to be fast enough to simulate 24 FPS (to get 24 captured frames per second, each frame capture must take less than about 42 ms). But let’s enumerate them from slowest to fastest at taking a snapshot of a 4K video frame (average of 10 runs).

CALayer render(in: CGContext)

Renders a CALayer into a bitmap graphics context, which is then read back as a UIImage.

    UIGraphicsBeginImageContextWithOptions(webView.bounds.size, true, 0.0)
    let context = UIGraphicsGetCurrentContext()!
    webView.layer.render(in: context)
    let image = UIGraphicsGetImageFromCurrentImageContext()
    UIGraphicsEndImageContext()

⏱️ Time: 270 ms

Metal texture

(Code from Chris on StackOverflow)

    extension UIView {
        func takeTextureSnapshot(device: MTLDevice) -> MTLTexture? {
            let width = Int(bounds.width)
            let height = Int(bounds.height)
            if let context = CGContext(data: nil, width: width, height: height, bitsPerComponent: 8, bytesPerRow: 0, space: CGColorSpaceCreateDeviceRGB(), bitmapInfo: CGImageAlphaInfo.premultipliedLast.rawValue), let data = context.data {
                layer.render(in: context)
                let desc = MTLTextureDescriptor.texture2DDescriptor(pixelFormat: .rgba8Unorm, width: width, height: height, mipmapped: false)
                if let texture = device.makeTexture(descriptor: desc) {
                    texture.replace(region: MTLRegionMake2D(0, 0, width, height), mipmapLevel: 0, withBytes: data, bytesPerRow: context.bytesPerRow)
                    return texture
                }
            }
            return nil
        }
    }

    let texture = self.webView.takeTextureSnapshot(device: MTLCreateSystemDefaultDevice()!)

⏱️ Time: 250 ms (I really thought this would be faster, and maybe I’m doing something wrong, or perhaps Metal textures are hyper-efficient once created, but take a bit to create in the first place)

UIView drawHierarchy()

    let rendererFormat = UIGraphicsImageRendererFormat.default()
    rendererFormat.opaque = true
    let renderer = UIGraphicsImageRenderer(size: webView.bounds.size, format: rendererFormat)
    let image = renderer.image { _ in
        webView.drawHierarchy(in: webView.bounds, afterScreenUpdates: false)
    }

⏱️ Time: 150 ms

JavaScript transfer

What if we relied on JavaScript to do all the heavy lifting, and had the canvas write its contents into a base64 string, and then using WebKit messageHandlers, communicate that back to Swift?

    const video = document.querySelector('video');
    const canvas = document.createElement('canvas');
    canvas.width = video.videoWidth;
    canvas.height = video.videoHeight;
    const ctx = canvas.getContext('2d');
    ctx.drawImage(video, 0, 0, canvas.width, canvas.height);
    video.style.display = 'none';
    document.body.prepend(canvas);

    // 🟢 New code
    const imageData = canvas.toDataURL('image/jpeg');
    webkit.messageHandlers.imageHandler.postMessage(imageData);

Then convert that to UIImage.

    func userContentController(_ userContentController: WKUserContentController, didReceive message: WKScriptMessage) {
        if message.name == "imageHandler", let dataURLString = message.body as? String {
            let image = convertToUIImage(dataURLString)
        }
    }

    private func convertToUIImage(_ dataURLString: String) -> UIImage {
        let dataURL = URL(string: dataURLString)!
        let data = try! Data(contentsOf: dataURL)
        return UIImage(data: data)!
    }

⏱️ Time: 130 ms

WKWebView takeSnapshot()

    webView.takeSnapshot(with: nil) { image, error in
        self.image = image
    }

⏱️ Time: 70 ms

Test results

As you can see, the best of the best got to about 14 frames per second, which isn’t quite up to video playback level. Close, but not quite. I’m out of ideas.
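For reference, here is the arithmetic behind those numbers as a small sketch. It assumes captures run back to back with no other overhead, so the real frame rates would be somewhat lower. The timings are the ones measured above; the variable and function names are just for illustration.

```javascript
// Capture times measured in the post, in milliseconds per 4K frame.
const captureTimesMs = {
  'CALayer render(in:)': 270,
  'Metal texture': 250,
  'UIView drawHierarchy()': 150,
  'JavaScript transfer': 130,
  'WKWebView takeSnapshot()': 70,
};

const TARGET_FPS = 24;
const budgetMs = 1000 / TARGET_FPS; // about 41.7 ms per frame

// Best-case frame rate if every capture starts as soon as the last one ends.
function maxFps(captureMs) {
  return 1000 / captureMs;
}

for (const [name, ms] of Object.entries(captureTimesMs)) {
  const fps = maxFps(ms).toFixed(1);
  const verdict = ms <= budgetMs ? 'fits the budget' : 'too slow';
  console.log(`${name}: ${fps} fps (${verdict})`);
}
```

Even the fastest approach needs to get roughly 40% quicker before it fits into a 24 fps frame budget.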

There were some interesting suggestions to use the WebCodecs VideoFrame API, or an OffscreenCanvas, but maybe due to my lack of experience with JavaScript I couldn’t get them meaningfully faster than the above JavaScript code with a normal canvas.

If you have another idea that you get working, I’d love to hear it.

Why not just get the direct video file then?

There are two good answers to this question.

First, the obvious one, Google/YouTube doesn’t like this. If you get the direct video URL, you can circumvent ads, which they’re not a fan of. I want Juno to happily exist as an amazing visionOS experience for YouTube, and Google requests you do so through the web player, and I think I can build an awesome app with that so that’s fine by me. 360° video is a small feature and I don’t think it’s worth getting in trouble over.

Secondly, having access to the direct video still wouldn’t do you any good. Why? Codecs.

Battle of the codecs

Quick preamble. For years, pretty much all web video was H264. Easy. It’s a format that compresses video to a smaller file size while still keeping a good amount of detail. The act of uncompressing it is a little intensive (think, unzipping a big zip file), so computers have dedicated chips specifically built to do this mega fast. You can do it without these, purely in software, but it takes longer and consumes more power, so not ideal.

Time went on, videos got bigger, and the search started for something that compresses video even better than H264 (ideally without licensing fees). The creatively named H265 (aka HEVC) was born, and Apple uses it a bunch (it still has licensing fees, however). Google went in a different direction and developed VP9 and made it royalty-free, though there were still concerns around patents. These formats can produce video files that are roughly half the size of H264 but with the same visual quality.

Apple added an efficient H265 hardware decoder to the iPhone 7 back in 2016, but to my knowledge VP9 decoding is done completely in software to this day and just relies on the raw power and efficiency of Apple’s CPUs.

Google wanted to use their own VP9 format, and for 4K videos and above, only VP9 is available, no H264.

Okay, and?

So if we want to play back a 4K YouTube video on our iOS device, we’re looking at a VP9 video plain and simple. The catch is, you cannot play VP9 videos on iOS unless you’re granted a special entitlement by Apple. The YouTube app has this special entitlement, called com.apple.developer.coremedia.allow-alternate-video-decoder-selection, and so does Safari (and presumably other large video companies like Twitch, Netflix, etc.)

But given that I cannot find any official documentation on that entitlement from Apple, it’s safe to say it’s not an entitlement you or I are going to be able to get. So we cannot play back VP9 video, meaning we cannot play back 4K YouTube videos. Your guess is as good as mine as to why; maybe it’s very complex to implement if there’s indeed no native hardware decoder, so Apple doesn’t like giving it out. So if you want 4K YouTube, you’re looking at either a web view or the YouTube app.

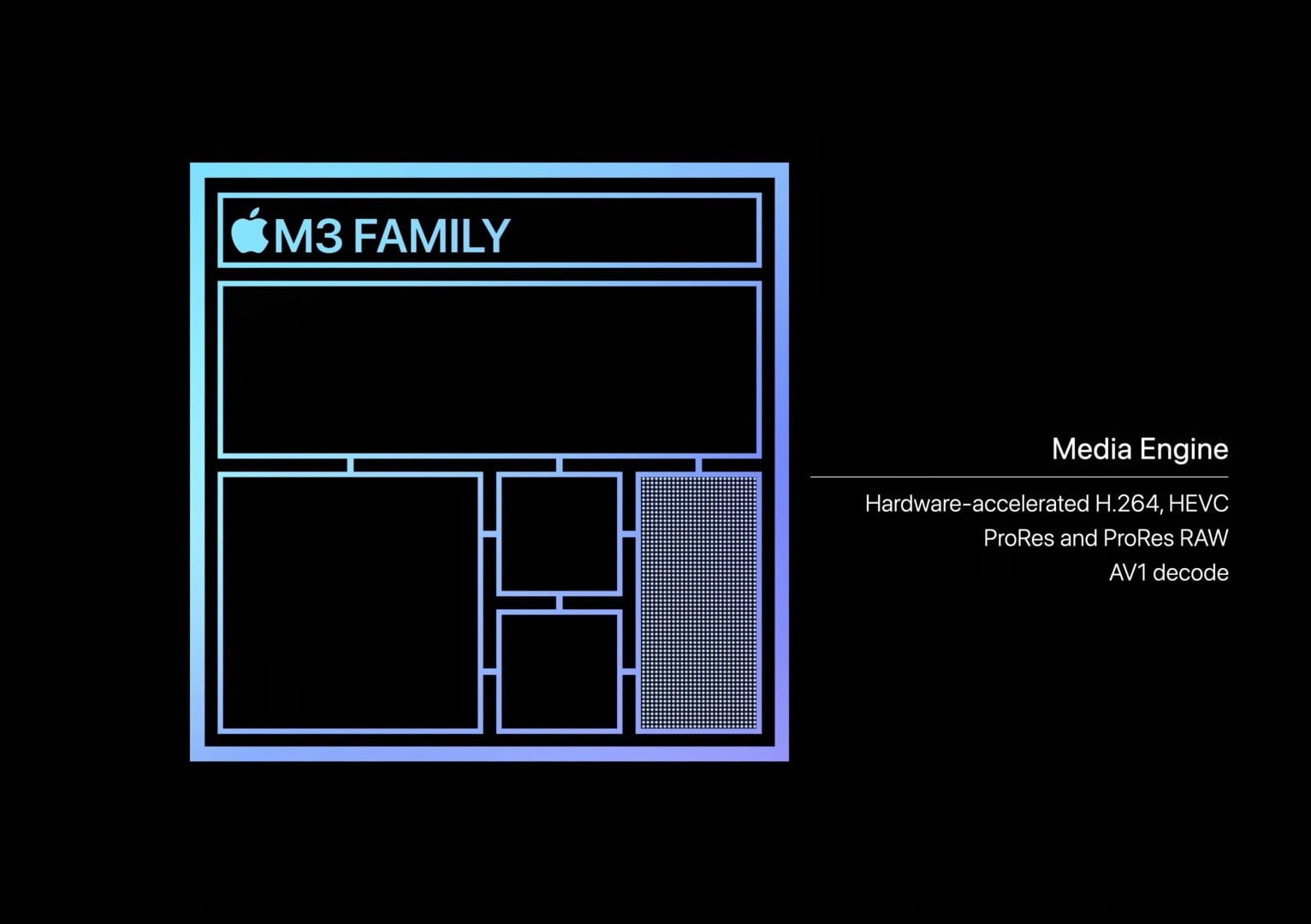

Sidebar: the new AV1 codec

Given that no one could agree on a video format, everyone went back to the drawing board, formed a collective group called the Alliance for Open Media (which includes Google, Apple, Samsung, Netflix, etc.), and authored the AV1 codec, hopefully creating the one video format to rule them all, with no licensing fees and hopefully no patent issues.

Google uses this on YouTube, and Apple even added a hardware decoder for AV1 in their latest A17 and M3 chips. This means on my iPhone 15 Pro I can play back an AV1 video in iOS’ AVPlayer like butter.

Buuuuttttt, the Apple Vision Pro ships with an M2, which has no such hardware decoder.
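If you want to see what a given web view claims to support, here is a minimal sketch using the standard `canPlayType` method on a video element. The codec strings are common example profiles I picked for illustration, and `probeCodecs` is a hypothetical helper. Note the assumption baked in: this reports what the web player says it can handle, not what a native app can do with AVPlayer, and it says nothing about whether decoding happens in hardware.

```javascript
// Sketch only: ask a <video> element which codecs it claims to support.
// The codec strings below are example profiles chosen for illustration.
const CODEC_TYPES = {
  h264: 'video/mp4; codecs="avc1.42E01E"',
  hevc: 'video/mp4; codecs="hvc1.1.6.L93.B0"',
  vp9: 'video/webm; codecs="vp09.00.10.08"',
  av1: 'video/mp4; codecs="av01.0.05M.08"',
};

// canPlayType returns "", "maybe", or "probably"; "" is reported as "no".
function probeCodecs(video) {
  const result = {};
  for (const [name, type] of Object.entries(CODEC_TYPES)) {
    result[name] = video.canPlayType(type) || 'no';
  }
  return result;
}

// In a page: console.table(probeCodecs(document.createElement('video')));
```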

Why it’s not a big loss

So the tl;dr so far is YouTube uses the VP9 codec for 4K YouTube, and unless you’re special, you can’t play back VP9 video directly, which we need to do to be able to project it onto a sphere. Why not just do 1080p video?

Because even 4K video looks bad in 360 degrees.

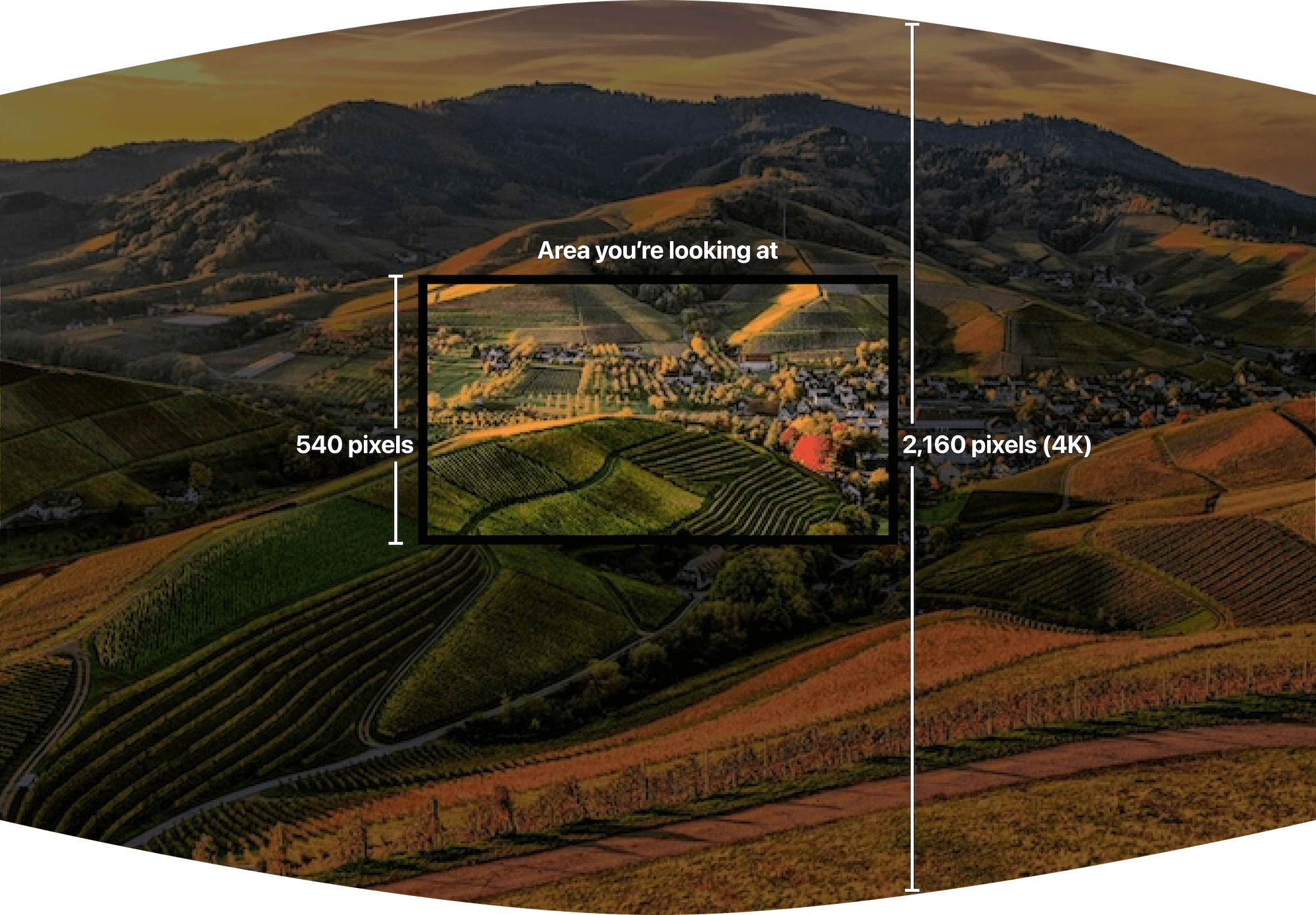

Wait, what? Yeah, 4K looks incredible on the big TV in front of you, but you have to remember for 360° video, that same resolution is completely surrounding you. At any given point, the area you’re looking at is a small subset of the full resolution! In other words, the Vision Pro’s resolution is 4K per eye, meaning any area you look can show a 4K image, and when you stretch a 4K video all around you, everywhere you look is not 4K. Almost like the Vision Pro’s resolution per eye drops enormously. If you’re familiar with the pixels per degree (PPD) measurement for VR headsets, 4K immersive video has a quite bad PPD measurement.

To test this further, I downloaded a 4K 360° video and projected it onto a sphere. The video is stretched from my feet to over my head. When I look straight ahead, I’d say I’m looking at maybe 25% of the total height of the video. That means in a 4K video, which is 2,160 pixels tall, I can see maybe 25% of those pixels, or 540 pixels, so it looks a bit better than a 480p video but far from even 720p.
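The same back-of-the-envelope math as a small sketch. The 25% figure is my eyeball estimate from above, not a measured field of view, and the pixels-per-degree number assumes the frame’s full width wraps the whole 360°. Both function names are just for illustration.

```javascript
// Rough numbers only: the visible fraction is an eyeball estimate.
function visibleRows(videoHeight, visibleFraction) {
  return Math.round(videoHeight * visibleFraction);
}

// Horizontal pixels per degree when the frame wraps the given coverage.
function pixelsPerDegree(videoWidth, coverageDeg = 360) {
  return videoWidth / coverageDeg;
}

console.log(visibleRows(2160, 0.25)); // 540
console.log(pixelsPerDegree(3840).toFixed(1)); // "10.7"
```

So a 4K 360° frame gives you under 11 pixels for every degree you turn your head, which is why it looks so soft.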

Quick attempted visualization, showing the area you look at with a 4K TV:

Versus the area you look at in a 4K 360° video:

So in short, it might be 4K, but it’s stretched over a far more massive area than you’re used to when you think about 4K. Imagine your 4K TV is the size of your wall and you’re watching it from a foot away, it’d be immersive, but much less sharp. That means in reality it only looks a bit better than 480p or so.

So while it’d be cool to have 4K 360° video in Juno, I don’t think it looks good enough that it’s that compelling an experience.

Enter 8K

For the demo videos on the Apple Vision Pro (and the videos they show at the Apple Store), those are recorded in 8K, which gives you twice as many vertical and horizontal pixels to work with, and it levels up the experience a ton. Apple wasn’t flexing here, I’d say 8K is the minimum you want for a compelling, immersive video experience.

And YouTube does have 8K, 360° videos! They’re rare since the hardware to record that isn’t cheap, but they are available. And pretty cool!

But if I were a betting man, I’d bet against that ever coming to the first-generation Vision Pro.

Why? As mentioned, 8K video on YouTube is only available in VP9 and AV1. The Vision Pro does not have a hardware AV1 decoder as it has an M2 not an M3, so it would have to do it in software. Testing on my M1 Pro MacBook Pro, which seems to Geekbench similarly to the Vision Pro, trying to play back 8K 360° video in Chrome is quite choppy and absolutely hammers my CPU. Apple’s chips may be powerful enough to grunt through 4K without a hardware decoder, but it doesn’t seem you can brute force 8K without a hardware decoder.
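A quick sketch of why 8K is so much harder to brute force than 4K. It assumes decode work scales roughly with the number of pixels produced, which is a simplification (real decoders and codecs vary), and the frame rate passed to `pixelsPerSecond` is whatever you choose to plug in, not something from the post.

```javascript
// Pixels the decoder must produce per frame at each resolution.
const RESOLUTIONS = {
  '4K': { width: 3840, height: 2160 },
  '8K': { width: 7680, height: 4320 },
};

function pixelsPerFrame({ width, height }) {
  return width * height;
}

// Pixels per second at a given frame rate (the frame rate is an assumption).
function pixelsPerSecond(resolution, fps) {
  return pixelsPerFrame(resolution) * fps;
}

const ratio = pixelsPerFrame(RESOLUTIONS['8K']) / pixelsPerFrame(RESOLUTIONS['4K']);
console.log(ratio); // 4
```

Twice the width and twice the height means four times the pixels per frame, so a CPU that just about copes with 4K in software has a very long way to go for 8K.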

Maybe I’m wrong or missing something, or Google works with Apple and re-encodes their 360° videos in a specific, Vision-Pro-only H265 format, but I’m not too hopeful that this generation of the product, without an M3, will have 8K 360° YouTube playback. That admittedly is an area the Quest 3 has the Vision Pro beat, in that its Qualcomm chip has an AV1 decoder.

Does this mean the Vision Pro is a failure and we’ll never see 8K immersive video? Not at all, you could do it in a different codec, Apple has already shown it’s possible, I’m just not too hopeful for YouTube videos at this stage.

In Conclusion

As a developer, I don’t appear to be able to play back the codec YouTube uses for its 4K video. It also doesn’t seem possible to snapshot frames fast enough to project them in real-time 3D. And even if it were, 4K video unfortunately does not look too great; ideally you want 8K, which seems even less likely.

But dang, it was a fun weekend learning and trying things. If you manage to figure out something that I was unable to, tell me on Mastodon or Twitter, and I’ll name the 3D Theater in Juno after you. 😛 In the meantime, I’m going to finish up Juno 1.2!

Additional thanks to: Khaos Tian, Arthur Schiller, Drew Olbrich, Seb Vidal, Sanjeet Suhag, Eric Provencher, and others! ❤️