Rivian R2 wishes as an R1 owner

February 9, 2026

After 7 years with a Tesla Model 3, we picked up a gen 2 Rivian R1S in April of 2025. We still have the Model 3 as a second vehicle, but it’s been really cool experiencing a new electric vehicle from a very passionate new company.

2026 is a really exciting time for Rivian, as in the first half of this year they’re launching their R2 vehicle - a smaller, less expensive SUV offering that should have a lot more mass-market appeal.

With a bunch of journalists getting previews of the vehicle today, I thought I’d share what I’m really hoping for in this new vehicle having experienced their existing vehicle for almost a year, and a Tesla for the better part of a decade. None of these are in any particular order.

Better audio

We sprung for the “Premium Audio” package in our R1S and… it’s not premium at all. Whatever system just came with our Model 3 sounds demonstrably better, from where it feels like the sound is coming from (much more expansive) to the bass, the R1S just feels a lot weaker. Honestly for the base audio system it would be probably decent, but for a “Premium Audio” system it just falls short. They have been making it better and better with software updates of all things over the course of our vehicle’s life, and it’s noticeably better now than it was at the beginning. A recent update in December basically sounds like they figured out how to turn on the subwoofer in the trunk a little bit, but it’s still just not that much.

With the Tesla sometimes I’d park and finish out a song before leaving the car because it just sounded so good, have never had that happen in the Rivian so I hope they bring some of that experience to the R2 even through another “Premium Audio” package for those who care.

No dual motor EPA shenanigans

So our R1S is dual motor, meaning it has a motor for the front wheels and a separate motor for the back wheels, meaning you get AWD. Rivian allows you to get more range by only using the front motor, turning it into effectively a front wheel drive vehicle and as only one motor is active you get a bit better range (about 10%). Sounds great, right? Choose between more efficient front-wheel drive on trips, but just use AWD around town by default.

Well, the devil is in the details. In order to be able to market this slightly improved range, the EPA requires Rivian to automatically revert back to this front wheel drive/higher efficiency mode after a few hours. Kinda like gas vehicles and how they turn off the engine at traffic lights, and if you disable that it just keeps turning itself back on. So even if it’s the winter and you’re like, “Dang, roads are a little dicey, I want to be in AWD”, if you park the car for a while and forget to set it to AWD, it just reverts to front wheel drive. It’s like if your iPhone kept reverting to “Low Battery Mode” every 2 hours even after you keep toggling it off so Apple could advertise that model having 10% better battery life.

Note: the vehicle isn’t hard-locked to front wheel drive in this mode. If it slips it alerts you that it’s switching over to AWD (I don’t want to wait for it to slip), and if you floor it on the highway for instance it’ll engage the rear motor for extra grunt, but because it has to link up the rear motor to an already moving system, you can sometimes get a kinda weird clunk feeling as the rear motor connects itself at speed which isn’t very satisfying. It almost feels like a wheel slip in the rain.

This is maddening, and is only the case on their dual motor vehicles. For tri and quad motor vehicles, they just don’t market them as having that extra 10% range, so all the modes are actually sticky! If you say “front wheel drive mode” (they call it “Conserve”) it stays there indefinitely, if you say “AWD mode”, it stays there indefinitely.

Is this dumb? Yes. Do I blame the EPA? Yes. Do I also blame Rivian? Also yes, they’re making this trade off to be able to market extra range.

If Rivian does this same stuff for the R2 dual motor just so they can advertise a few extra miles, I really hope they have a $10 option you can configure when you order called “Give me less marketed range with no actual range decrease but have the vehicle actually do what I tell it to”, but maybe with a catchier title.

Spare compact tire

Our Tesla lacks a spare tire of any sort, instead electing to include a repair method and roadside assistance. A few years back a nasty pothole absolutely destroyed the sidewall of one of our tires on a drive home, and since it was sidewall damage it simply wasn’t repairable, so we had to call Tesla roadside assistance. Unfortunately Tesla roadside assistance was absolutely useless, taking ages to respond and then ultimately not having any providers in the area, so we just ditched the car and got a ride home with a friend and dealt with it the next day.

After that I was like “I do not want another vehicle without a spare tire on board”.

With our R1S on the other hand, we had another unlucky event where we popped a tire (also the sidewall if you’d believe it, I have some great luck) and sure enough, since we elected to get a compact spare tire it was super easy to deal with, just grab the included jack, throw the compact spare on, then the next day we just slowly drove over to a shop for a new tire. Completely uneventful.

I was worried that with the smaller body you wouldn’t be able to fit one in the R2, but Jerry Rig Everything showed room for a compact spare in the sub trunk, nice!

Digital rear view mirror

I don’t see this in any of the videos so it seems unlikely but I’m holding out hope it’s an option.

Picture this: you approach your vehicle, since it sees it’s your phone it knows who you are, so it positions your seat, steering wheel, mirrors, Apple Music/Spotify account, and temperature preferences. You didn’t have to do a thing, the vehicle is just smart! Except… your spouse is 7 inches shorter than you so when you look in the rear view mirror you’re staring at the back seat.

Is having to adjust your rear view mirror a big deal? No, but having the vehicle do everything else for you almost draws more attention to the final thing it’s missing that you still have to adjust every single time. This is a solved problem in inexpensive vehicles, just have a “digital rear view mirror” that requires no adjusting as it just shows a camera feed of the rear of the vehicle where the mirror is.

Rivian’s cameras are legitimately so good, that at night it’s easier to use them for side blind spot monitoring when you change lanes than the actual side mirrors, because they let in enough light that you can actually make out details through the pseudo “Night Mode” camera vision that you can’t with the mirrors alone, it’s wild. I want that for the rear view mirror too!

Do you still prefer an analog mirror? That’s totally cool, all the vehicles that offer this let you just toggle back to a good ol’ reflective mirror. No harm no foul.

V2H story

Not a lot of people realize one of the most powerful parts of EVs: they’re mobile power stations that can (theoretically) power your entire home. Take a Tesla Model 3 for instance, it has a 75 kWh battery. Tesla also sells Powerwalls to help you back up your home, each Powerwall is around $6,000 and has 13.5 kWh of battery capacity. Yes, that means your Model 3 is the equivalent of more than 5 Powerwalls, or $33,000 in equivalent batteries.

That’s nuts! Ever have a power outage? With the average home in Canada using about 30 kWh per day, that could power your house through potentially multiple days and genuinely save lives.
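If you want to check my napkin math, here’s a rough sketch in Python using only the numbers above. The `usable_fraction` knob is my own assumption (you never really get 100% of a pack out, and inverter losses aren’t modeled), so treat the result as a ceiling rather than a promise.

```python
# Back-of-the-napkin numbers from the post; none of these are official specs.
MODEL_3_KWH = 75.0        # pack size quoted above
POWERWALL_KWH = 13.5      # capacity of one Powerwall
POWERWALL_PRICE = 6000    # rough price per Powerwall, in dollars
HOME_KWH_PER_DAY = 30.0   # rough daily usage for an average Canadian home


def powerwall_equivalents(pack_kwh, powerwall_kwh=POWERWALL_KWH):
    """How many Powerwalls hold the same energy as the vehicle pack."""
    return pack_kwh / powerwall_kwh


def equivalent_cost(pack_kwh, price=POWERWALL_PRICE):
    """What that many Powerwalls would cost at the quoted price."""
    return powerwall_equivalents(pack_kwh) * price


def backup_days(pack_kwh, home_kwh_per_day=HOME_KWH_PER_DAY, usable_fraction=1.0):
    """Days of home backup; usable_fraction is a hypothetical derating factor."""
    return pack_kwh * usable_fraction / home_kwh_per_day


if __name__ == "__main__":
    print(f"Powerwall equivalents: {powerwall_equivalents(MODEL_3_KWH):.2f}")
    print(f"Equivalent cost: ${equivalent_cost(MODEL_3_KWH):,.0f}")
    print(f"Backup days at full capacity: {backup_days(MODEL_3_KWH):.1f}")
```

So roughly five and a half Powerwalls and about two and a half days of average usage, before any real-world losses.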

My Rivian R1S is a lot better than my Tesla here in that it actually has normal, 120V AC outlets, but they can only output a measly 1.5 kW, so even powering a hungry kettle could result in the breaker tripping. Much better than the max 120W my Tesla can do through a 12V cigarette outlet (good god how is that the best they can do), but it’s still not enough output to power a house effectively.

It’s kinda like being in a drought with a massive water tower, but water only comes out in drips. Better than nothing, but we need output speed too.

The R1 can output much more by pulling DC energy directly from the battery through CCS protocols like the ISO 15118 standard, and sure enough, despite Rivian not talking about it, folks with these systems have been able to connect the R1S directly to their house with the appropriate cable and send up to 24 kW to power their entire home. Crazy stuff, and I hope Rivian talks about this more in the future as clearly the vehicle supports it even if they are quiet about it.

I’m kinda curious what the R2’s story is here. The CEO of Rivian said in an interview (~1:20:20) that the R1 and R2 both have bidirectional EV charging in the realm of 20 kW (which again we’re finally seeing folks be able to take advantage of in the R1 recently), but unlike being limited to 1.5 kW of AC output in the R1, the CEO says the R2 will be able to do “10 or 11 kW”.

That’s massive versus the 1.5 kW the R1 can do, but I’m not sure that made it to production in the end? Doug DeMuro showed a Rivian graphic with a V2L adapter (at 26:22) but it’s only listed as 2.4 kW. Still a lot better than 1.5, but a far cry from the 10 or 11 kW that RJ Scaringe said earlier.
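To put all those output numbers side by side, here’s a small Python sketch comparing each one against the average draw of a home using about 30 kWh per day. The labels are just mine, and keep in mind an average hides the peaks: a home regularly spikes well above its average (hello, kettle), which is why 1.5 kW feels so tight even though it’s technically above the average.

```python
# Output figures quoted in this post; labels are my own shorthand.
HOME_KWH_PER_DAY = 30.0

OUTPUTS_KW = {
    "Tesla 12V outlet": 0.12,
    "R1 120V AC outlets": 1.5,
    "R2 V2L adapter (graphic)": 2.4,
    "R2 AC (CEO interview)": 11.0,
    "R1 DC bidirectional": 24.0,
}


def average_load_kw(kwh_per_day):
    """Average continuous draw of a home over 24 hours."""
    return kwh_per_day / 24.0


def headroom(output_kw, load_kw):
    """How many times over the source covers the given load."""
    return output_kw / load_kw


if __name__ == "__main__":
    load = average_load_kw(HOME_KWH_PER_DAY)
    print(f"Average home load: {load:.2f} kW")
    for name, kw in OUTPUTS_KW.items():
        print(f"{name}: {kw} kW, {headroom(kw, load):.1f}x the average load")
```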

Either way, talk more about this Rivian! This is one of the coolest parts about EVs!

Better suspension

The R1S has a fancy air suspension, so picture a bagpipe over each corner of the vehicle that lets it inflate or deflate to change the height of the vehicle and theoretically make for a cushier ride.

I say theoretically because honestly I find the gen 2 R1S kinda rough suspension wise. Like, hitting the same potholes around where I live in the Tesla Model 3 (with a much simpler coil suspension, no bagpipes) versus the Rivian R1S I honestly find the Tesla makes me wince less. I thought it might have been the massive 22" wheels that came on the Rivian and the correspondingly small sidewall on the tire, but we switched to a 20" wheel for the winter with a much larger sidewall and it’s better but still not great.

Would love to see Rivian tune this so that the R2 is a super smooth ride, I’ve heard the newer Tesla Model Ys are incredible here and also just have a coil suspension like the R2 will have.

Faster charging

Brands love to brag about maximum charge speeds, our Tesla for instance got a software update to enable 250 kW charging, which is super fast. To put that in perspective, most new homes in North America have 200 amp service going into their home, 250 kW is the equivalent of upgrading to 1,000 amp service, and then pouring every drop of that power into your car.
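Here’s the quick math behind that comparison as a Python sketch. I’m assuming a typical North American 240 V residential service and ignoring power factor and losses, so it’s an equivalence for scale, not how a fast charger is actually wired up.

```python
# Assumption: 240 V split-phase residential service, unity power factor.
SERVICE_VOLTS = 240.0


def amps_for_power(power_kw, volts=SERVICE_VOLTS):
    """Current needed to deliver power_kw at the given voltage."""
    return power_kw * 1000.0 / volts


def service_kw(amps, volts=SERVICE_VOLTS):
    """Maximum power a service of the given amperage can deliver."""
    return amps * volts / 1000.0


if __name__ == "__main__":
    print(f"200 A service tops out near {service_kw(200):.0f} kW")
    print(f"250 kW would need about {amps_for_power(250):.0f} A")
```

A 200 amp service works out to about 48 kW, so a 250 kW charger is pulling a bit over five houses’ worth of maximum service at once.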

But, while that top speed is impressive, it holds that for like, minutes maybe before crashing down to much slower charging speeds. Our R1S is no different here, with good peak speeds but it doesn’t exactly hold them super well. There’s been tons of reports that the cooling method for the batteries in the R1S is just underwhelming, where the Rivian R1 models have two battery packs stacked on top of each other with a single liquid cooling plate in the middle (so only the top or the bottom of the battery is touching the cooling surface), and that single plate often seems a little underpowered for cooling such a massive pack quickly. Kinda like thermal throttling in laptops! We typically see around 45 minutes for the battery to charge 10-80% at fast chargers.

The R2 on the other hand appears to be moving to a smarter cooling method where the cooling liquid flows almost through a ribbon, weaving along the sides of each cell in the battery pack, meaning a lot more cooling surface area.

And indeed, this seems to have paid off, with a Rivian employee saying they’re now under 30 minutes for 10-80% on the R2. Class-leading? No, Hyundai is under 20 minutes, but a fair bit better.

And for folks without EV experience, honestly, this is mostly a non-issue. 99.5% of your charging is done at home where speeds don’t matter (it just charges overnight like your phone), it’s only if you’re going on a bit of a roadtrip where this comes into play.

Better USB-C

Okay this isn’t a big deal, but the R1S has a ton of USB-C ports everywhere, like there must be close to a dozen, which is awesome, but if you try to charge a power bank or a laptop or something over USB-C it’s just… not very fast. I haven’t actually measured but I’m assuming they’re only doing 12V (at most) instead of at least 20V which most chargers are at nowadays, which I really hope they improve. My MacBook charges so slow.

Better phone charger

This is the one part of the Rivian that I’m like “how did this even make it out of the factory”. And if you’d believe it the phone charger in my vehicle is the second revision, so this is their attempt at fixing it somehow.

Basically they have a flat little area near the arm rests where you can place one or two phones to have them wirelessly charge. Sounds fine, right?

I haven’t measured it, but from experience I believe the charging “sweet spot” is approximately 4 atoms wide. If the car is in motion at all, the phone moves from those four atoms and the charger tries super hard to charge it but ultimately cannot, just making the phone get super hot and lose a bunch of battery life.

One time we went camping and I was like “Okay, the vehicle is not moving, surely I can just set it here while I sleep and it’ll charge”. Nope, somehow even stationary the phone did not charge beyond 20% overnight and was super hot in the morning. What.

They have managed to make a phone charger that is worse than not having one at all. I can’t even place my phone in the arm rest while I drive because it just cooks it, at least if nothing was there it could just be a storage location.

No, mine is not broken, this is a common complaint from just about every Rivian owner, and they need to make this better for the R2. Just hot glue a MagSafe puck in there at minimum.

Better Phone as a Key (PAAK)

Rivian and Tesla do the (honestly very cool) thing where the car uses your phone to detect your proximity and lock/unlock itself, and recognizes who the driver is to set preferences. It’s great, no having to carry around bulky car keys (don’t worry, there is a backup little credit card style key you carry in your pocket in case your phone dies or disappears).

Rivian even recently updated theirs to use the first-party “Apple CarKey” functionality so you get bonuses like being able to unlock it for a few hours even after your phone’s battery died, and it uses “Ultra Wide Band” (UWB) so it can position your phone in relation to the car down to the centimeter. Tesla doesn’t do this and has their own proprietary thing that I think is based on Bluetooth but might use a bit of UWB on newer models (not on my car).

But… Tesla’s is still much better. There’s two aspects of nailing good “phone as a key” support: firstly, unlocking the car as you approach (duh), and secondly, knowing who approached the car so you can set their preferences (seat, steering wheel, mirror positions, temperature preferences, music streaming account, etc.)

- Unlocking: B+ for Rivian here, sometimes even with the recent update I have to stand by the door for a few seconds and be like “Um, hello” before it sees me there and unlocks. Same phone, walk over to my Tesla, always unlocks instantly even though the Rivian should have a massive advantage with UWB.

- Identifying driver: D for Rivian here. Again, with UWB and centimeter-level positioning of the driver in relation to the vehicle Rivian should be able to know exactly who is approaching the driver door when my girlfriend and I (who both have Rivian keys) approach the vehicle, but 95% of the time when my girlfriend is with me (despite her always preferring to be passenger) it sets her as the driver. Even weirder, sometimes she’ll yell out the window if I’m putting something in the frunk “Oh it actually recognized you this time!” with me set as the profile, but then when I sit down in the seat it reverts to her. What.

Rivian needs to do better here for the R2. The pain is especially compounded by the fact that if you leave in a bit of a rush and somehow don’t realize the driver profile is wrong, Rivian won’t let you change your driver settings unless you slow down to under 3 mph, so you better get ready to pull over and stop the car if you need to adjust something. With Tesla you can always just swap profile and have your steering wheel, seat, mirrors, etc. move to where they should be, and that feels a lot safer than having to muck around with changing things via a touch screen or sit there tweaking controls on the side of the car seat.

Smoother software

Somehow despite my Model 3 being a 2018 model and probably running a Raspberry Pi compared to the hardware Rivian runs, moving around the OS in the Tesla still feels faster. With the Rivian there’s still lag just bouncing around screens with stuff sometimes taking a second or two to show up. I don’t get it.

I really hope this improves on the R2, and thankfully it looks like it does, Doug DeMuro bounces around the R2’s UI here and it’s much faster than my gen 2 R1S with everything loading virtually instantly. Yay.

CarPlay

I’ve never had a vehicle with CarPlay. We rented a vehicle with it and it was kinda neat, but Teslas and Rivians already have like every piece of software I’d want when interfacing with a vehicle (good, traffic-based maps and popular music streaming services), so with the exception of Overcast I don’t personally care about CarPlay at all.

That being said, I know a ton of people do so I kinda hope Rivian looks into it even just as a little windowed experience because I think it would make a lot more people interested.

Alexa is so bad

About a month ago at their Autonomy Day Rivian previewed (among many other cool things) their new “Rivian Assistant”.

This is sorely needed, their current system uses Alexa and. it. is. so. bad.

With our Tesla, you can say “Navigate to Blah” and it will just automatically plot it and you’re off to the races.

Best case with Rivian you’re like, “Alexa, navigate to Bob’s Cool Donuts in Dartmouth”, and Alexa is like “Would you like to navigate to Bob’s Cool Donuts, Dartmouth, Nova Scotia”, “Yes” where it repeats literally the only possible match back to you requiring confirmation every time instead of just… taking you there.

A more typical case is “Alexa, navigate to Bob’s Donuts in Dartmouth”, “Would you like directions to Bob’s Donuts in Toledo, Ohio”. No Alexa, I want the one that’s a five minute drive, not the one a three day drive away. “Oh, okay, try being more specific next time”

Better heat pump

They do a decent job isolating the sound from the outside if you’re in the vehicle, but if it’s cold outside and you heat up your Rivian R1S, you can hear that sucker from a full block away in this super high pitched whine. I’ve understandably had multiple people ask “Is it okay?”.

Better time placement

The UX for knowing the current time in the cabin is not great. There is no clock on the main driver instrument display, and it’s in the furthest place possible on the middle display, so it’s not exactly easy to check. There’s tons of space on the driver display so I either wish they shoved it there, or if you put in directions, I wish it showed you the current time there. Right now it just says “ETA 2:58 PM” which if you’ve been driving for 45 minutes and kinda have lost track of time is not particularly helpful, that could be in 5 minutes or in 25 minutes for all I know.

It looks a bit better on the R2 (seen in MKBHD’s video) where they’re placing it on the middle display a lot closer to the driver, but I still wish they just put it on the driver display.

Better second row release

The R1S and many other modern vehicles have really stupid and downright dangerous second row releases, where the main door handles are electric, so if there’s an electrical issue and you need to get out of the vehicle, you obviously need a manual release. On my Tesla, there’s a (shocker) pull handle that is obvious and you can just pull to get out of the vehicle. Easy peasy (don’t worry, Tesla has since changed to an inexplicably stupider, hidden design like Rivian).

The R1 is incredibly stupid and you literally have to pop off trim in the rear door to get access to a cable you can yank. Yeah, good luck remembering that in dire circumstances, so I threw one of those window breakers in the back cubby.

The R2 is better here, Jerry Rig Everything shows a little button patch you can pop off much easier to get to the cable, but still, just put the manual release that the front doors have, this is a basic safety feature and should not be complicated.

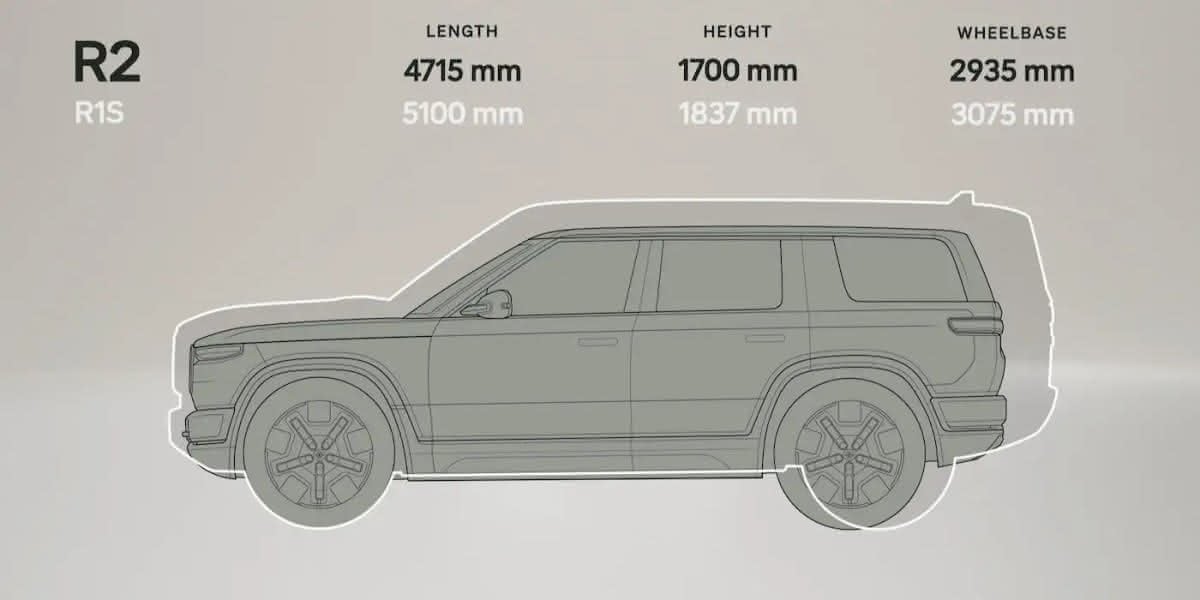

Smaller

This we know will be the case, with the Rivian R2 being about 2 feet shorter than the R1S.

The R1S is a properly large vehicle, which makes it very capable, but I dunno, I do find myself wishing it was a little smaller quite often, so I honestly think if the R2 is compelling enough I might be trading in my R1.

… Does this sound negative?

Reading it back a bit, this post sounds a wee bit negative even though the intention is just to talk about things I hope they improve.

So just to be totally clear, I love the thing. It’s spacious, incredibly capable, looks great, has amazing range, and is just a lot of fun to drive with a ton of creature comforts that I miss every time I drive the Tesla. But there’s always room for improvement!

I’m so excited

I genuinely think Rivian is doing such cool things, and the company behind it seems to have a real passion for building cool products instead of just sitting on Twitter all day, so I’m super excited to see what this mass-market vehicle does for them and I’m hoping for the best.

Looks like they’re launching in spring (I guessed summer!) with more pricing and configuration details March 12th.